TapSense

Validation level: 5. CHI, UIST, CSCW and TOCHI paper publication

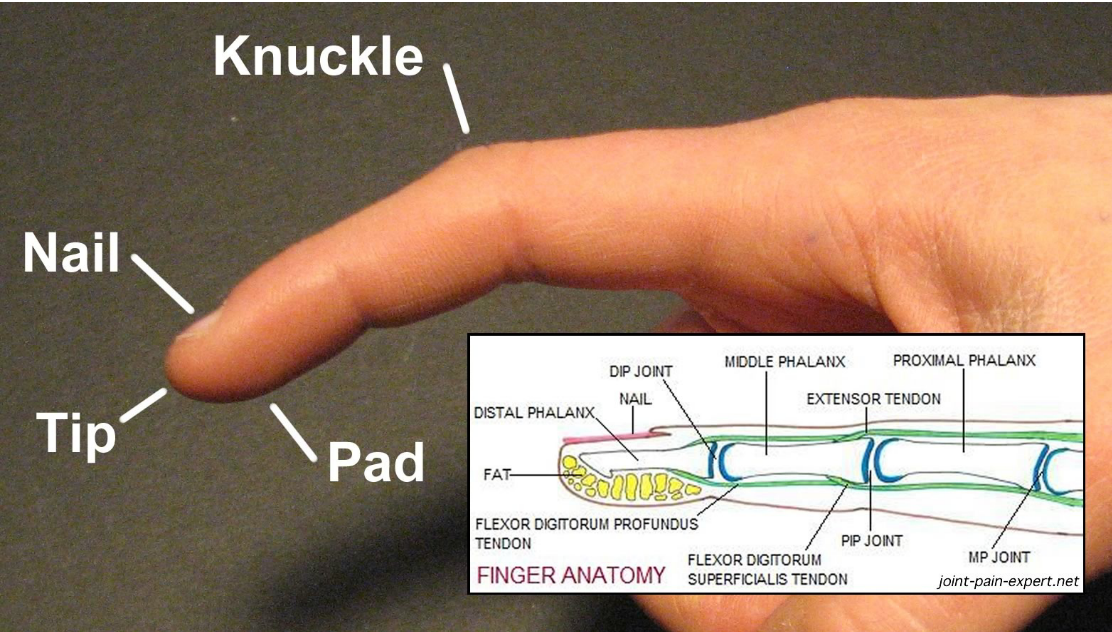

TapSense is an enhancement to touch interaction that allows conventional screens to identify how the finger is being used for input. This is achieved by segmenting and classifying sounds resulting from a finger’s impact. The system can recognize different finger locations – including the tip, pad, nail and knuckle – without the user having to wear any electronics.

Storyboard of Functions

TapSense can classify different types of finger input.

Different parts of the finger can be identified for use on touch surfaces.

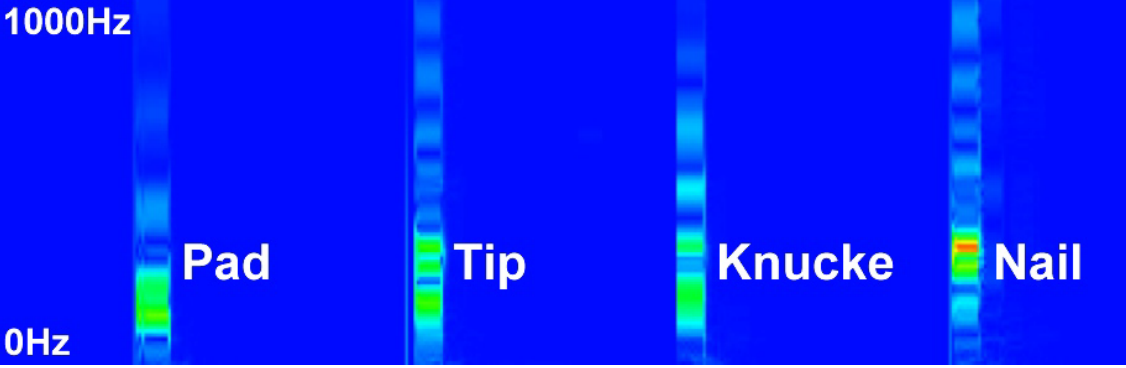

Spectrograms of our four finger input types. Once the audio data for the impact has been captured, our software processes it, extracting a series of time-independent acoustic features.
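As a rough illustration of what "time-independent acoustic features" could look like, the sketch below computes a few summary values over one whole impact segment instead of over a sequence of frames. The specific features (RMS energy, spectral centroid, dominant frequency) and the function names are assumptions chosen for illustration; the source does not list the features TapSense actually uses.

```python
import cmath
import math


def magnitude_spectrum(samples):
    """Naive DFT magnitudes for bins 0..n/2 (fine for short impact segments)."""
    n = len(samples)
    return [
        abs(sum(s * cmath.exp(-2j * math.pi * k * t / n)
                for t, s in enumerate(samples)))
        for k in range(n // 2 + 1)
    ]


def acoustic_features(samples, sample_rate):
    """Summarize a whole segment with features that ignore timing within it.

    Hypothetical feature set, not the one used by TapSense.
    """
    n = len(samples)
    if n == 0:
        raise ValueError("segment must contain at least one sample")
    mags = magnitude_spectrum(samples)
    bin_hz = sample_rate / n  # frequency spacing between DFT bins
    total = sum(mags)
    # Magnitude-weighted mean frequency of the segment.
    centroid = (
        sum(k * bin_hz * m for k, m in enumerate(mags)) / total if total else 0.0
    )
    # Overall loudness of the segment.
    rms = math.sqrt(sum(s * s for s in samples) / n)
    # Frequency of the strongest bin.
    dominant = max(range(len(mags)), key=mags.__getitem__) * bin_hz
    return {"rms": rms, "spectral_centroid_hz": centroid, "dominant_hz": dominant}
```

A feature vector like this would then be handed to a classifier trained on labeled tip, pad, nail and knuckle impacts.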

Having even a small set of input objects could be valuable. For instance, painting applications on conventional interactive surfaces typically use a palette-based color mode selection.

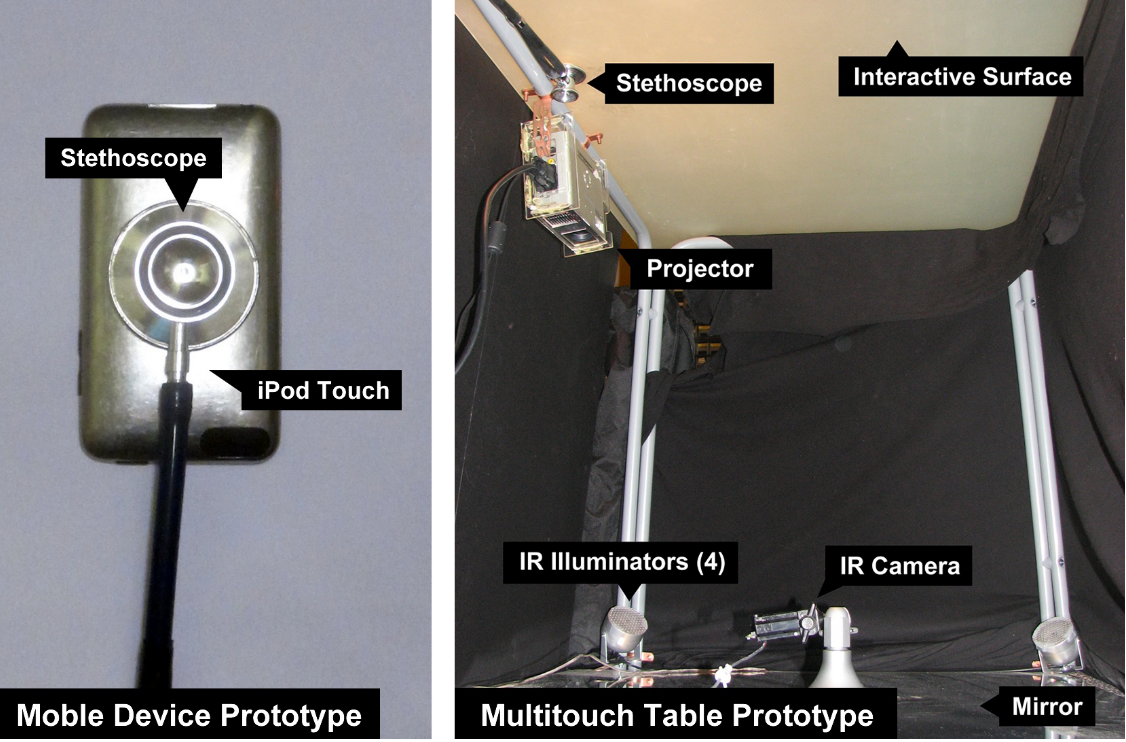

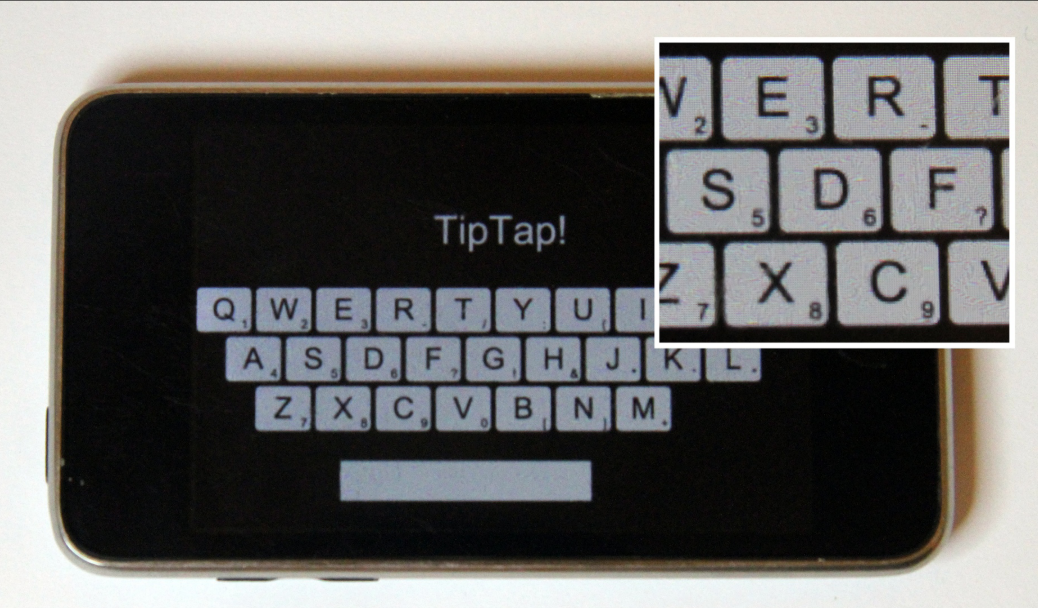

Mobile and table prototypes. Stethoscopes naturally provide a high level of environmental noise suppression. This allows impacts to be readily segmented from any background noise with a simple amplitude threshold.
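The amplitude-threshold segmentation described above can be sketched as follows. This is a minimal illustration, assuming the audio is available as a list of normalized samples; the function name and the `threshold` and `min_gap` parameters are hypothetical and not taken from the TapSense implementation.

```python
def segment_impacts(samples, threshold, min_gap):
    """Return (start, end) index pairs for impacts found by amplitude threshold.

    A sample is "loud" when its absolute value reaches `threshold`.
    A new segment begins when more than `min_gap` samples separate two
    consecutive loud samples; otherwise they belong to the same impact.
    """
    segments = []
    start = None      # index where the current segment began
    last_loud = None  # index of the most recent loud sample
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            if start is None:
                start = i
            elif i - last_loud > min_gap:
                # The quiet stretch was long enough: close the previous impact.
                segments.append((start, last_loud + 1))
                start = i
            last_loud = i
    if start is not None:
        segments.append((start, last_loud + 1))
    return segments
```

Note that two impacts closer together than `min_gap` come back as a single segment in this sketch, which is one way to picture why impacts that land almost simultaneously are hard to separate.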

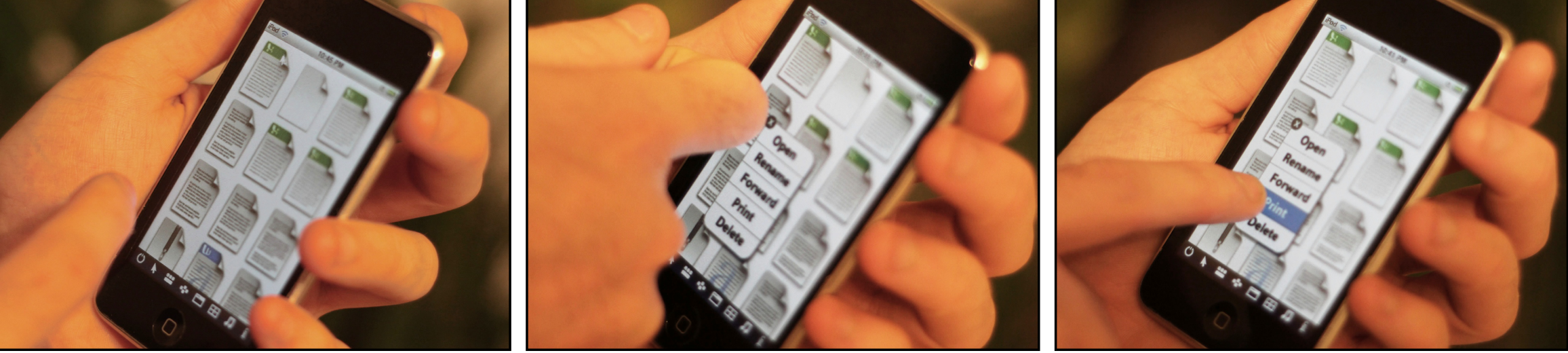

Left: a directory of files; items can be opened or dragged using the traditional finger pad tap. Center: the user alt-clicks a file with a knuckle tap, triggering a contextual menu. Right: the user pad taps on the print option.

To type a primary character, users can use their finger pad as usual. To type an alt character, a finger tip is used. Thus, users have access to the entire character set without having to switch pages. To backspace, the user can nail tap anywhere on the screen (i.e., no button necessary).
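The keyboard behavior described above amounts to dispatching on the classified tap type. The sketch below assumes the classifier has already produced a tap type; `TapType`, `ALT_CHARS` and `apply_tap` are hypothetical names, and the alt-character layout is made up for illustration.

```python
from enum import Enum


class TapType(Enum):
    PAD = "pad"
    TIP = "tip"
    NAIL = "nail"
    KNUCKLE = "knuckle"


# Hypothetical layout: primary character -> alt character on the same key.
ALT_CHARS = {"q": "1", "w": "2", "e": "3"}


def apply_tap(text, tap_type, key=None):
    """Return the text after one classified tap on the soft keyboard."""
    if tap_type is TapType.NAIL:
        # A nail tap anywhere on the screen acts as backspace.
        return text[:-1]
    if key is None:
        # The tap did not land on a key.
        return text
    if tap_type is TapType.TIP:
        # A tip tap types the key's alt character, if it has one.
        return text + ALT_CHARS.get(key, key)
    if tap_type is TapType.PAD:
        # A pad tap types the primary character as usual.
        return text + key
    # Knuckle taps have no keyboard action in this sketch.
    return text
```

For example, a pad tap on "q", a tip tap on "w" and then a nail tap leave the text as "q".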

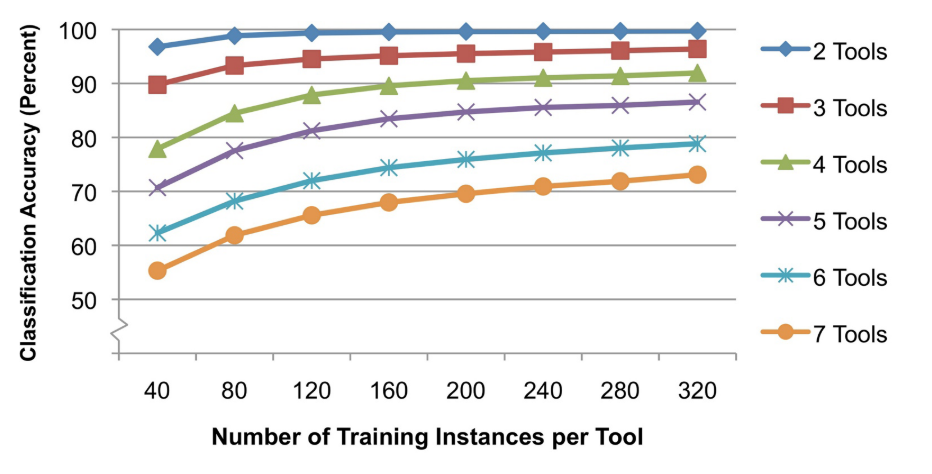

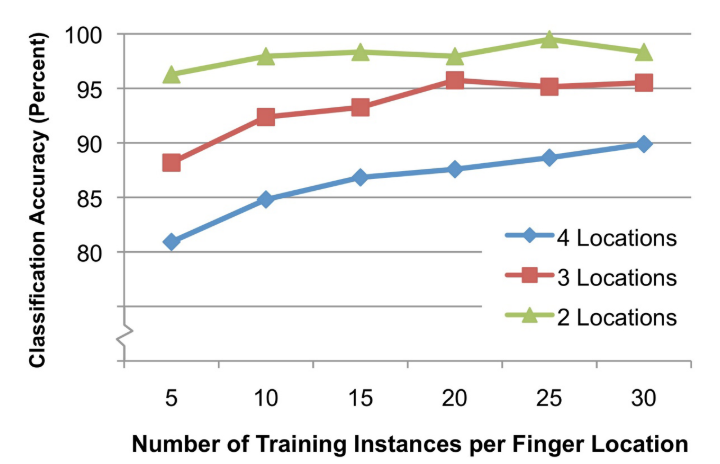

Evaluation

Highlights:

-Powerful interaction opportunities for touch input, especially in mobile devices, where input is extremely constrained

-Can identify different sets of passive tools

-Classifies four input types at around 95% accuracy

-Users do not need to be instrumented

Limitations:

-There is one special case where this process breaks down and for which there is no immediate solution: timing collisions. If two objects strike the surface in sufficiently close temporal proximity, their acoustic signals cannot be segmented separately and therefore cannot be classified accurately

-Requires a stethoscope affixed to the surface of an input-capable display